Generative vs. Discriminative Machine Learning Models: Key Differences and Applications (2025)

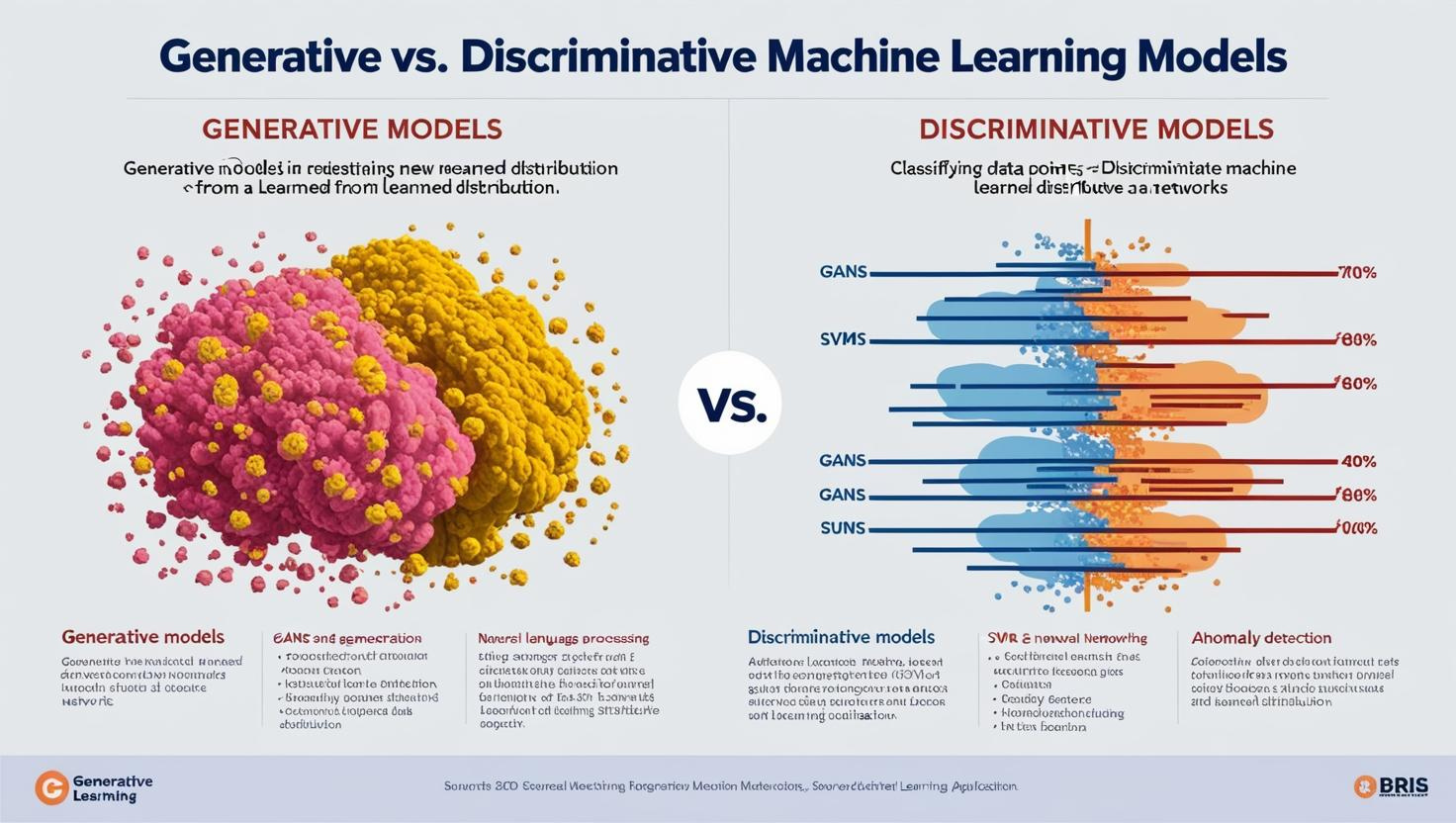

One common way to categorize machine learning models is to divide them into two types: generative and discriminative. But what exactly distinguishes these two categories, and what does it mean for a model to be generative or discriminative?

In simple terms, generative models learn how the data is distributed, which lets them assign a probability to a given example and, in many cases, produce new examples (for instance, predicting what comes next in a sequence). Discriminative models, in contrast, are used for tasks like classification or regression: they learn the conditional probability of an output given the input, or a decision rule directly, and return a prediction based on it. Let’s look more closely at the differences between these two types of models and when each should be used.

Generative vs. Discriminative Models: Understanding the Key Differences

Machine learning models can be categorized in various ways, including generative models, discriminative models, parametric models, non-parametric models, tree-based models, and non-tree-based models. In this article, we'll focus specifically on generative and discriminative models.

Let’s begin by defining each type of model, then examine examples of each.

Generative Models

Generative models focus on how the data is distributed within each class. These algorithms model the distribution of the data points and typically estimate the joint probability p(x, y): the probability that a given input x and a given output or label y occur together.

Generative models are designed to estimate probabilities and likelihoods, modeling the data points themselves and distinguishing between classes based on these probabilities. Since these models learn the probability distribution of the dataset, they can also use it to generate new data instances. For classification, a generative model typically learns the joint probability p(x, y) = p(x | y) p(y) and then applies Bayes' theorem, p(y | x) = p(x | y) p(y) / p(x), to obtain the probability of each class given the input, answering the question:

“What’s the likelihood that this data point belongs to this class or another?”
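To make the joint-versus-conditional distinction concrete, here is a minimal Python sketch that assumes a tiny, made-up joint distribution p(x, y) over one binary feature and a spam/ham label. The hypothetical `posterior` function applies Bayes' theorem in the form p(y | x) = p(x, y) / p(x), and the hypothetical `sample` function draws new (x, y) pairs from the same joint table, which is the sense in which a generative model can generate data.

```python
import random

# Hypothetical joint distribution p(x, y): x says whether an email contains the word
# "free", y is its label. The probabilities are made up for illustration and sum to 1.
joint = {
    ("free", "spam"): 0.30,
    ("free", "ham"): 0.05,
    ("no_free", "spam"): 0.10,
    ("no_free", "ham"): 0.55,
}

def posterior(joint, x):
    """Bayes' theorem: p(y | x) = p(x, y) / p(x), with p(x) = sum over y of p(x, y)."""
    p_x = sum(p for (xi, _), p in joint.items() if xi == x)
    return {y: p / p_x for (xi, y), p in joint.items() if xi == x}

def sample(joint, rng):
    """Generative use: draw a brand-new (x, y) pair from the joint distribution."""
    pairs = list(joint)
    return rng.choices(pairs, weights=[joint[pair] for pair in pairs], k=1)[0]

print(posterior(joint, "free"))
print(sample(joint, random.Random(0)))
```

Here `posterior(joint, "free")` gives p(spam | free) = 0.30 / 0.35, roughly 0.86. A discriminative model would learn only that conditional, so it would have no way to sample new inputs x.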

Some common examples of generative models include:

- Linear Discriminant Analysis (LDA)

- Hidden Markov Models (HMM)

- Bayesian Networks (e.g., Naive Bayes)

Discriminative Models

In contrast, discriminative models focus on learning the boundary between classes within a dataset. Their primary goal is to identify the decision boundary that can be used to classify data instances reliably. Unlike generative models, which model how the data was generated, discriminative models either model the conditional probability p(y | x) directly or learn a decision rule without probabilities at all; in both cases, they make no attempt to model how the inputs themselves are distributed.

Discriminative models aim to answer the following question:

“Which side of the decision boundary does this data instance lie on?”

Examples of discriminative models include:

- Support Vector Machines (SVM)

- Logistic Regression

- Decision Trees

- Random Forests

Conclusion

While generative models focus on understanding the distribution of the data and generating new samples from it, discriminative models are concerned with distinguishing between classes and finding decision boundaries. The choice between these two approaches largely depends on the task at hand. Generative models are often used when you need to generate new data or understand the underlying distribution, while discriminative models are often more effective for classification and regression tasks, where the goal is to predict the label or value of a given instance from its features.

Key Differences Between Generative and Discriminative Models

Here’s a summary of the major distinctions between generative and discriminative models:

Generative Models:

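- Objective: Aim to capture how the data is actually distributed, including the distribution of inputs within each class.

- Probability: Model the joint probability distribution p(x, y), then use Bayes’ theorem to derive p(y | x) when classifying.

- Computational Cost: Often more computationally expensive than discriminative models, although simple ones such as Naive Bayes are cheap to train.

- Use Cases: Suitable for unsupervised tasks such as density estimation and data generation, as well as for supervised classification.

- Outlier Sensitivity: Often more sensitive to outliers, since outliers distort the estimated data distribution.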

Discriminative Models:

- Objective: Focus on modeling the decision boundary between classes.

- Probability: Learn the conditional probability p(y | x).

- Computational Cost: Typically computationally cheaper than generative models.

- Use Cases: Designed for supervised learning tasks, since they need labeled data to learn p(y | x) or a decision boundary.

- Outlier Sensitivity: Often more robust to outliers than generative models.

Examples of Generative Models

Here are some well-known examples of generative models:

1. Linear Discriminant Analysis (LDA):

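LDA estimates the mean of each class, a single variance shared by all classes (a shared covariance matrix when there are several features), and each class's prior probability. Once these statistics are computed, predictions are made by using Bayes' theorem to estimate the probability that a given set of inputs belongs to each class and choosing the most probable one.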
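The LDA procedure can be sketched for a single numeric feature as follows. This is an illustrative Python version, not a library implementation: the function names and the toy data are hypothetical, and because LDA assumes all classes share one covariance, with a single feature that reduces to one pooled variance.

```python
import math

def fit_lda(xs, ys):
    """Estimate class priors, per-class means, and one pooled variance shared by all classes."""
    classes = sorted(set(ys))
    n = len(xs)
    priors, means = {}, {}
    for c in classes:
        members = [x for x, y in zip(xs, ys) if y == c]
        priors[c] = len(members) / n
        means[c] = sum(members) / len(members)
    # Pooled within-class variance: deviations from each point's own class mean,
    # divided by (n - number of classes) for an unbiased estimate.
    squared_dev = sum((x - means[y]) ** 2 for x, y in zip(xs, ys))
    variance = squared_dev / (n - len(classes))
    return priors, means, variance

def predict_proba_lda(model, x):
    """Bayes' theorem: p(y | x) is proportional to p(x | y) p(y), with a Gaussian p(x | y)."""
    priors, means, variance = model
    scores = {}
    for c in priors:
        density = math.exp(-((x - means[c]) ** 2) / (2 * variance)) / math.sqrt(2 * math.pi * variance)
        scores[c] = density * priors[c]
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

def predict_lda(model, x):
    """Pick the class with the highest posterior probability."""
    probs = predict_proba_lda(model, x)
    return max(probs, key=probs.get)

# Hypothetical toy data: one feature, two classes.
xs = [1.0, 1.5, 2.0, 5.0, 5.5, 6.0]
ys = [0, 0, 0, 1, 1, 1]
model = fit_lda(xs, ys)
print(predict_lda(model, 2.2), predict_lda(model, 4.9))
```

With these six points the class means are 1.5 and 5.5 and the priors are equal, so inputs below 3.5 are assigned to class 0 and inputs above it to class 1.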

2. Hidden Markov Models (HMM):

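A Markov chain can be represented as a graph of states, with probabilities indicating the likelihood of transitioning from one state to another; the next state depends only on the current one. In a Hidden Markov Model, this Markov chain is unobservable (hidden): the model receives only a sequence of observations, each emitted by the current hidden state with some probability. The transition and emission probabilities are then combined, step by step, to compute quantities such as the likelihood of an observation sequence or the most probable sequence of hidden states that produced it.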
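One standard way to compute the most probable sequence of hidden states is the Viterbi algorithm, a dynamic-programming method. The Python sketch below assumes a toy two-state weather model; the state names, observations, and all probabilities are made up for illustration, and `viterbi` is a hypothetical helper, not a library API.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (probability, path) for the most likely hidden-state sequence."""
    # best[t][s]: probability of the most likely path that ends in state s at step t
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back = [{}]  # back[t][s]: the previous state on that most likely path
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # Best predecessor: previous path probability times transition probability
            prev = max(states, key=lambda p: best[t - 1][p] * trans_p[p][s])
            best[t][s] = best[t - 1][prev] * trans_p[prev][s] * emit_p[s][observations[t]]
            back[t][s] = prev
    # Take the most probable final state, then follow the back-pointers.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.insert(0, back[t][path[0]])
    return best[-1][last], path

# Hypothetical toy HMM: hidden weather, observed activities.
states = ("Rainy", "Sunny")
start_p = {"Rainy": 0.6, "Sunny": 0.4}
trans_p = {"Rainy": {"Rainy": 0.7, "Sunny": 0.3},
           "Sunny": {"Rainy": 0.4, "Sunny": 0.6}}
emit_p = {"Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
          "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1}}
prob, path = viterbi(["walk", "shop", "clean"], states, start_p, trans_p, emit_p)
print(path, prob)
```

For the observations walk, shop, clean, this returns the path Sunny, Rainy, Rainy with a probability of about 0.0134. For long sequences, implementations usually work with log-probabilities to avoid numerical underflow.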

3. Bayesian Networks:

Bayesian networks are probabilistic graphical models that represent conditional dependencies between variables using a Directed Acyclic Graph (DAG). The nodes of the graph represent variables, and the edges represent conditional dependencies. A Bayesian network encodes a set of conditional independence relationships in a joint probability distribution, which lets the joint distribution be factored into smaller conditional distributions, and it is used to estimate the probability of various outcomes. One common example is Naive Bayes, which simplifies probability calculation by assuming that all features are conditionally independent of each other given the class.
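Naive Bayes corresponds to a very simple Bayesian network in which the class is the only parent of every feature, so the joint distribution factors as p(y) times the product of p(x_i | y). The following Python sketch assumes categorical features and uses add-one (Laplace) smoothing so that unseen feature values do not produce zero probabilities; the function names and the toy data are hypothetical.

```python
import math
from collections import Counter, defaultdict

def fit_naive_bayes(rows, labels, alpha=1.0):
    """Collect the counts needed for p(y) and p(x_i | y)."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (feature index, class) -> Counter of values
    feature_values = defaultdict(set)      # feature index -> distinct values seen
    for row, y in zip(rows, labels):
        for i, v in enumerate(row):
            feature_counts[(i, y)][v] += 1
            feature_values[i].add(v)
    return class_counts, feature_counts, feature_values, alpha, len(labels)

def predict_nb(model, row):
    """Return the class maximizing log p(y) + sum_i log p(x_i | y)."""
    class_counts, feature_counts, feature_values, alpha, n = model
    log_scores = {}
    for y, count in class_counts.items():
        score = math.log(count / n)
        for i, v in enumerate(row):
            # Smoothed estimate of p(x_i = v | y)
            num = feature_counts[(i, y)][v] + alpha
            den = count + alpha * len(feature_values[i])
            score += math.log(num / den)
        log_scores[y] = score
    return max(log_scores, key=log_scores.get)

# Hypothetical toy data: (outlook, temperature) -> a made-up yes/no label.
rows = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"),
        ("rainy", "cool"), ("sunny", "cool"), ("rainy", "hot")]
labels = ["no", "no", "yes", "yes", "yes", "no"]
model = fit_naive_bayes(rows, labels)
print(predict_nb(model, ("rainy", "cool")))
```

Summing log-probabilities instead of multiplying raw probabilities is a common design choice that keeps the scores from underflowing when there are many features.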

Examples of Discriminative Models

Here are some common discriminative models:

1. Support Vector Machines (SVM):

Support vector machines work by creating a decision boundary that separates different classes in the dataset. This boundary is a line in 2D, a plane in 3D, and a hyperplane in higher dimensions. The goal of SVM is to find the boundary that maximizes the margin, which is the distance between the boundary and the nearest data points (the support vectors). For datasets that aren't linearly separable, SVM uses the “kernel trick”, which implicitly maps the data into a higher-dimensional feature space where a linear boundary can be found; in the original space that boundary is non-linear, allowing SVM to handle more complex classification tasks.
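A linear SVM can be sketched by minimizing the soft-margin objective, (lam / 2) ||w||^2 plus the average hinge loss max(0, 1 - y(w · x + b)), with plain subgradient descent. This Python version is a simplified illustration under those assumptions: labels must be +1 or -1, the hyperparameters and toy points are arbitrary, it does not implement the kernel trick, and libraries use dedicated solvers instead. Penalizing ||w|| is what widens the margin, since the distance from the boundary to each margin hyperplane w · x + b = ±1 is 1 / ||w||.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Full-batch subgradient descent on lam/2 * ||w||^2 + mean(hinge loss).
    Labels must be +1 or -1."""
    dim = len(points[0])
    n = len(points)
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularization term
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # point is inside the margin or misclassified
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def classify(w, b, x):
    """Report which side of the hyperplane w . x + b = 0 the point x lies on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical, linearly separable toy data.
points = [(1, 2), (2, 1), (2, 2), (-1, -2), (-2, -1), (-2, -2)]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(points, labels)
print([classify(w, b, p) for p in points])
```

Only points with a margin below 1 contribute to the hinge-loss subgradient, which mirrors the role of the support vectors: once the nearest points sit outside the margin, the remaining points no longer move the boundary.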

2. Logistic Regression:

Logistic regression is a classification algorithm that models the logit (log-odds) of an instance belonging to class 1 as a linear function of its features. It then applies the sigmoid function, the inverse of the logit, to map this score to a probability between 0 and 1. If the probability is above 0.5, the instance is classified as class 1; otherwise, it’s classified as class 0. Logistic regression is typically used for binary classification, but it can be extended to multi-class problems using the one-vs-all (one-vs-rest) strategy, where a separate binary classifier is trained for each class, or by replacing the sigmoid with a softmax (multinomial logistic regression).
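The logistic regression steps can be sketched for one feature with plain gradient descent on the average log-loss (cross-entropy). This Python example is a minimal illustration: the learning rate, the number of epochs, and the toy data (hours studied versus pass/fail) are made-up assumptions, and the function names are hypothetical.

```python
import math

def sigmoid(z):
    """Inverse of the logit: map a log-odds score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, ys, lr=0.5, epochs=2000):
    """Fit p(y = 1 | x) = sigmoid(w * x + b) by gradient descent on the mean log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For the log-loss, the gradient is the average of (prediction - label) * input.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b

def predict_logistic(w, b, x, threshold=0.5):
    """Class 1 if the predicted probability exceeds the threshold, else class 0."""
    return 1 if sigmoid(w * x + b) > threshold else 0

# Hypothetical toy data: hours studied -> passed (1) or failed (0).
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = train_logistic_regression(xs, ys)
print(predict_logistic(w, b, 1.0), predict_logistic(w, b, 4.0))
```

Because the two classes overlap on this data (2.0 is labeled 1 and 3.0 is labeled 0), the log-loss has a finite minimum, and the fitted boundary, where w * x + b = 0 and the predicted probability is exactly 0.5, lies close to x = 2.5.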

3. Decision Trees:

A decision tree works by recursively splitting the dataset into smaller subsets based on feature values until the subsets are pure (contain a single class) or a stopping criterion, such as a maximum depth, is reached. The result is a tree structure: each internal node tests a feature (for example, whether a value is below a threshold), and each leaf holds a prediction, such as the majority class of the training points that reach it. Decision trees can handle both numerical and categorical data, and each split is chosen to make the resulting subsets as homogeneous as possible, typically measured with Gini impurity or information gain.
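The recursive splitting can be sketched in Python for numerical features, using Gini impurity to score candidate splits and midpoints between neighboring feature values as thresholds. The function names, the `max_depth` default, and the toy data are hypothetical, and production implementations add refinements that this sketch omits.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions (0 for a pure node)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(rows, labels):
    """Return (weighted Gini, feature, threshold) for the best split, or None if none exists."""
    best = None
    for f in range(len(rows[0])):
        values = sorted({row[f] for row in rows})
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2  # midpoint between neighboring values
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, threshold)
    return best

def build_tree(rows, labels, depth=0, max_depth=3):
    """Split recursively until a node is pure, cannot be split, or reaches max_depth."""
    split = best_split(rows, labels) if depth < max_depth and gini(labels) > 0 else None
    if split is None:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority label
    _, f, threshold = split
    left = [i for i, row in enumerate(rows) if row[f] <= threshold]
    right = [i for i, row in enumerate(rows) if row[f] > threshold]
    return {
        "feature": f,
        "threshold": threshold,
        "left": build_tree([rows[i] for i in left], [labels[i] for i in left], depth + 1, max_depth),
        "right": build_tree([rows[i] for i in right], [labels[i] for i in right], depth + 1, max_depth),
    }

def predict_tree(node, row):
    """Follow the decisions from the root down to a leaf."""
    while isinstance(node, dict):
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node

# Hypothetical XOR-style toy data: two numerical features, two classes.
rows = [(1, 1), (1, 9), (9, 1), (9, 9)]
labels = ["A", "B", "B", "A"]
tree = build_tree(rows, labels)
print([predict_tree(tree, row) for row in rows])
```

On this XOR-style data, the first split does not lower the impurity at all (it stays at 0.5); the greedy search still makes it, and the second level then separates the classes perfectly.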

4. Random Forests:

A random forest is an ensemble of decision trees, where the predictions of individual trees are combined to make the final decision. Each tree is trained on a bootstrap sample of the observations (drawn with replacement) and considers only a random subset of the features when choosing its splits. By combining the predictions of many trees (a majority vote for classification, an average for regression), random forests improve accuracy and robustness and reduce the risk of overfitting compared to a single decision tree.
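The bootstrap-and-vote idea can be sketched in Python as follows. To keep the example short, each tree is a one-split stump chosen by training error rather than a fully grown tree, and each stump is restricted to a random subset of the features; with a single split per tree, drawing the subset per tree is the same as drawing it per split. The function names, the `n_trees` and `n_features` parameters, and the toy data are hypothetical, and the forest combines its stumps by majority vote.

```python
import random
from collections import Counter

def fit_stump(rows, labels, features):
    """One-split tree: try the allowed features and keep the split with the fewest errors.
    Returns (feature, threshold, left_label, right_label)."""
    best = None
    for f in features:
        values = sorted({row[f] for row in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for row, y in zip(rows, labels) if row[f] <= t]
            right = [y for row, y in zip(rows, labels) if row[f] > t]
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0]
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, left_label, right_label)
    if best is None:  # no usable split in this sample: always predict the majority label
        majority = Counter(labels).most_common(1)[0][0]
        return (features[0], 0.0, majority, majority)
    return best[1:]

def fit_random_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    n, d = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        picked = [rng.randrange(n) for _ in range(n)]  # bootstrap: draw rows with replacement
        features = rng.sample(range(d), n_features)    # random subset of the features
        forest.append(fit_stump([rows[i] for i in picked], [labels[i] for i in picked], features))
    return forest

def predict_forest(forest, row):
    """Majority vote over the stumps' predictions."""
    votes = Counter(left if row[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two numerical features, two classes.
rows = [(1, 2), (2, 1), (2, 2), (1, 1), (8, 9), (9, 8), (9, 9), (8, 8)]
labels = ["A", "A", "A", "A", "B", "B", "B", "B"]
forest = fit_random_forest(rows, labels)
print(predict_forest(forest, (1.5, 1.5)), predict_forest(forest, (8.5, 8.5)))
```

Because every bootstrap sample differs, the stumps end up with slightly different thresholds (and occasionally see only one class); the vote smooths those differences out. For regression, the trees' numeric predictions would be averaged instead.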