Advancements in AI-Powered Sign Language Recognition: A 2025 Technical Assessment

When addressing communication barriers, our attention often turns to language translation apps or voice assistants. However, for the millions who rely on sign language, these tools fall short of bridging the divide. Sign language is far more than hand gestures—it is a sophisticated and nuanced form of communication that integrates facial expressions and body language, with each component conveying essential meaning.

The challenge is that sign languages are full languages in their own right, not signed versions of spoken ones, and they differ from one country to the next. For example, American Sign Language (ASL) has its own grammar and syntax, distinct from those of spoken English.

This complexity underscores the difficulty of developing technology capable of recognizing and translating sign language in real time. It demands a deep understanding of a dynamic and intricate language system in action.

A Fresh Approach to Sign Language Recognition

At Florida Atlantic University's (FAU) College of Engineering and Computer Science, a research team decided to chart a new course in sign language recognition. Rather than tackling the full complexity of sign language all at once, they concentrated on a critical first step: recognizing ASL alphabet gestures with high accuracy using artificial intelligence.

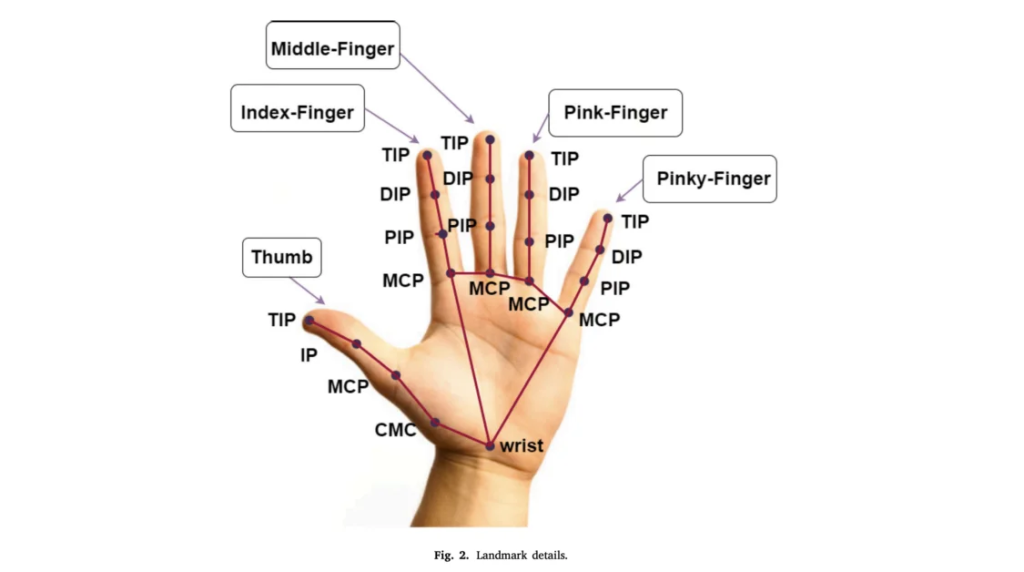

Imagine it as teaching a computer to interpret handwriting, but in three dimensions. The team built a dataset of 29,820 static images of ASL hand gestures, and they didn’t stop at collecting images. Each image was annotated with 21 key points on the hand, creating a precise map of how the hand is shaped and positioned for each sign.
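To make the annotation idea concrete, here is a minimal sketch of how one labeled image might be represented. The article does not describe the team's actual file format, so the `AnnotatedSample` class, its field names, and the choice to derive a box from the key points are all assumptions made for illustration. The output line follows the common YOLO detection label layout (class id, then normalized center x, center y, width, height).

```python
from dataclasses import dataclass

NUM_KEYPOINTS = 21  # key points per hand, as stated in the article


@dataclass
class AnnotatedSample:
    """One static image with its letter label and hand key points (hypothetical layout)."""

    image_path: str
    letter: str
    keypoints: list[tuple[float, float]]  # (x, y) pairs, normalized to [0, 1]

    def __post_init__(self) -> None:
        # Reject incomplete annotations early.
        if len(self.keypoints) != NUM_KEYPOINTS:
            raise ValueError(
                f"expected {NUM_KEYPOINTS} key points, got {len(self.keypoints)}"
            )

    def bounding_box(self) -> tuple[float, float, float, float]:
        """Tightest box around the key points as (center_x, center_y, width, height)."""
        xs = [x for x, _ in self.keypoints]
        ys = [y for _, y in self.keypoints]
        width = max(xs) - min(xs)
        height = max(ys) - min(ys)
        return (min(xs) + width / 2, min(ys) + height / 2, width, height)

    def to_yolo_line(self, class_id: int) -> str:
        """Format the derived box as one YOLO-style detection label line."""
        cx, cy, w, h = self.bounding_box()
        return f"{class_id} {cx:.4f} {cy:.4f} {w:.4f} {h:.4f}"
```

Deriving the box from the key points is only one possible design; a real pipeline might add padding around the hand or store the key points alongside the box.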

“This method hasn’t been explored in prior research,” says Dr. Bader Alsharif, who spearheaded the project during his Ph.D. studies. “It represents a new and promising direction for future advancements.”

Breaking Down the Technology

Let’s explore the technologies that power this innovative sign language recognition system.

MediaPipe and YOLOv8

The system’s effectiveness lies in the seamless integration of two cutting-edge tools: MediaPipe and YOLOv8.

MediaPipe serves as the system’s expert hand observer, tracking even subtle finger and hand positions. The research team selected MediaPipe for its accurate hand landmark tracking, which pinpoints the 21 points on each hand described above.

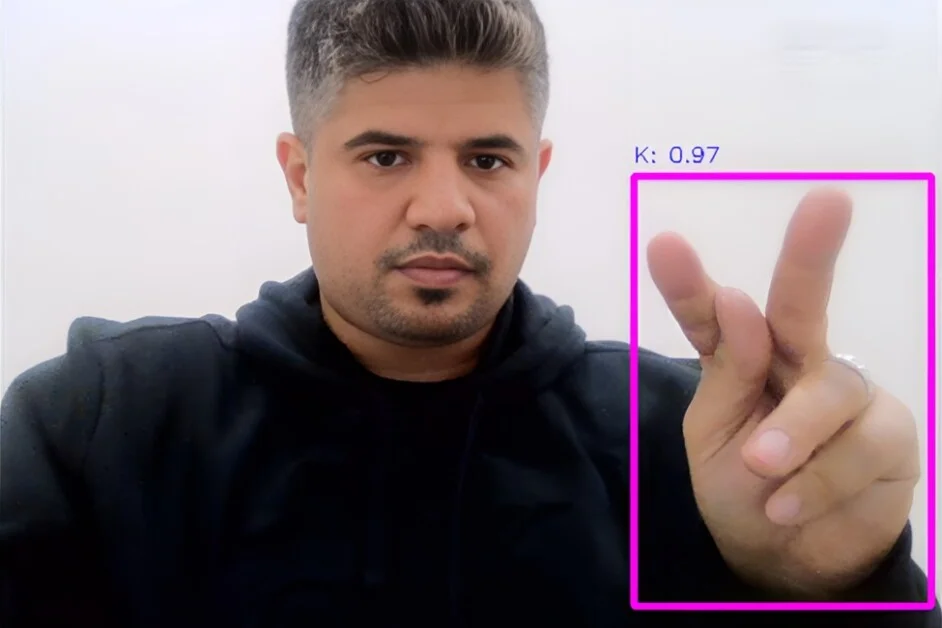

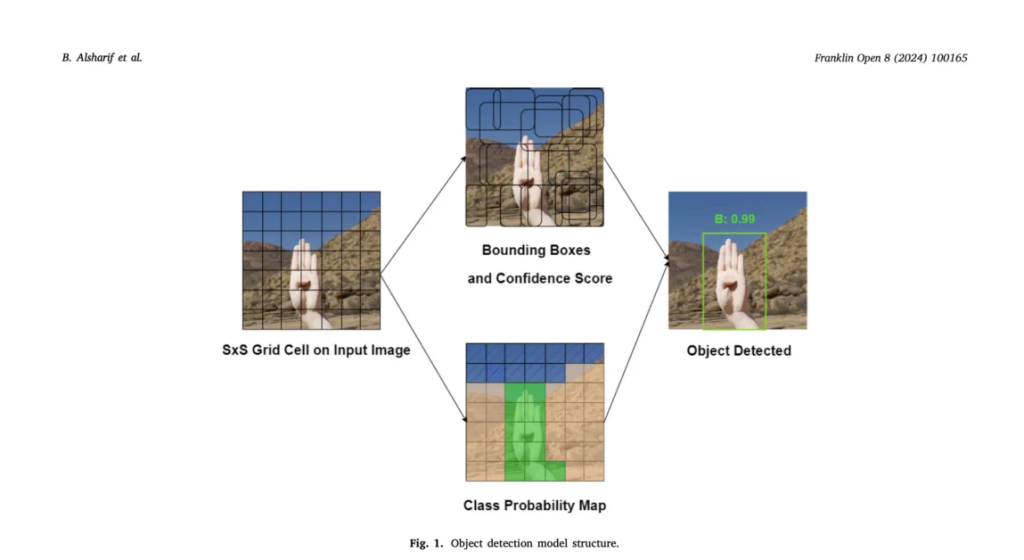

Tracking alone, however, is not enough. Interpreting these movements is where YOLOv8 takes the stage. YOLOv8 excels as a pattern recognition specialist, analyzing the tracked points to identify the corresponding letters or gestures. According to the research, YOLOv8 processes an image by dividing it into an S × S grid. Each grid cell is tasked with detecting objects—such as hand gestures—within its boundaries.
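The grid idea can be sketched in a few lines. The function below takes a box center in normalized image coordinates and returns the cell of an S × S grid that contains it. The function name and the grid size of 7 used in the usage note are illustrative assumptions, not values from the study.

```python
def responsible_cell(center_x: float, center_y: float, grid_size: int) -> tuple[int, int]:
    """Return the (row, col) of the grid cell that contains a normalized center point."""
    if not (0.0 <= center_x <= 1.0 and 0.0 <= center_y <= 1.0):
        raise ValueError("center coordinates must be normalized to [0, 1]")
    # Scale to grid units; clamp so a coordinate of exactly 1.0 stays in the last cell.
    col = min(int(center_x * grid_size), grid_size - 1)
    row = min(int(center_y * grid_size), grid_size - 1)
    return row, col
```

For example, with a 7 × 7 grid, `responsible_cell(0.5, 0.5, 7)` lands in the middle cell, row 3 and column 3.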

This dynamic combination of tools enables the system to recognize sign language gestures with remarkable precision.

How the System Works

The process behind this sign language recognition system is more intricate than it might initially appear.

Hand Detection Stage

When a sign is made, MediaPipe takes the first step by detecting the hand in the frame and mapping 21 key points. These points aren’t arbitrary: they correspond to specific joints and landmarks on the hand, from the fingertips down to the wrist.
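As a rough illustration of what those 21 points are, the sketch below rebuilds MediaPipe's landmark index order (wrist first, then four joints per finger) and converts normalized coordinates to pixels. It uses plain Python rather than the MediaPipe library itself, and the helper names `to_pixels` and `fingertips` are hypothetical.

```python
# Index order of MediaPipe's 21 hand landmarks: 0 is the wrist,
# followed by four joints for each finger, thumb first.
FINGERS = ["THUMB", "INDEX_FINGER", "MIDDLE_FINGER", "RING_FINGER", "PINKY"]

LANDMARK_NAMES = ["WRIST"]
for finger in FINGERS:
    # The thumb uses different joint names from the other four fingers.
    joints = ["CMC", "MCP", "IP", "TIP"] if finger == "THUMB" else ["MCP", "PIP", "DIP", "TIP"]
    LANDMARK_NAMES += [f"{finger}_{joint}" for joint in joints]


def to_pixels(landmarks, image_width, image_height):
    """Convert normalized (x, y) landmarks in [0, 1] to integer pixel coordinates."""
    return [
        (
            min(int(x * image_width), image_width - 1),
            min(int(y * image_height), image_height - 1),
        )
        for x, y in landmarks
    ]


def fingertips(points):
    """Pick out the five fingertip points from a full list of 21 points."""
    return {
        name: points[index]
        for index, name in enumerate(LANDMARK_NAMES)
        if name.endswith("_TIP")
    }
```

In this ordering the fingertips sit at indices 4, 8, 12, 16, and 20, which makes it easy to compare finger positions between similar-looking letters.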

Spatial Analysis

Next, YOLOv8 steps in to process this data in real time. For each grid cell in the image, it predicts:

- The probability of a hand gesture being present

- The precise coordinates of the gesture’s location

- The confidence level of its prediction

Classification

To locate and label the gesture, the system relies on bounding box prediction. Imagine drawing a precise rectangle around the hand gesture. YOLOv8 calculates five values for each box:

- The x and y coordinates of the center

- The box’s width and height

- A confidence score indicating the system’s certainty
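The five values above can be pictured as a small record. The sketch below converts a center-format box into pixel corners and discards low-confidence boxes. The `Box` type, the helper names, and the 0.5 threshold are assumptions for illustration and are not taken from the study.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """The five values listed above, with coordinates normalized to [0, 1]."""

    center_x: float
    center_y: float
    width: float
    height: float
    confidence: float


def to_corners(box: Box, image_width: int, image_height: int) -> tuple[int, int, int, int]:
    """Convert a center-format box to pixel corners (left, top, right, bottom)."""
    left = (box.center_x - box.width / 2) * image_width
    top = (box.center_y - box.height / 2) * image_height
    right = (box.center_x + box.width / 2) * image_width
    bottom = (box.center_y + box.height / 2) * image_height
    return round(left), round(top), round(right), round(bottom)


def keep_confident(boxes: list[Box], threshold: float = 0.5) -> list[Box]:
    """Drop boxes whose confidence falls below the (illustrative) threshold."""
    return [box for box in boxes if box.confidence >= threshold]
```

A box centered in a 640 × 480 frame with a width of 0.25 and a height of 0.5 would span pixels (240, 120) to (400, 360).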

This layered approach lets the system capture not just where the hand is but which letter it forms.

Why This Combination Works So Well

By integrating MediaPipe's precise hand tracking with YOLOv8's advanced object detection, the research team at FAU achieved results that exceeded expectations. Together, these technologies delivered 98% precision and a 99% F1 score.

This combination excels in handling the intricacies of sign language, distinguishing even subtle differences between gestures that might appear identical to the untrained eye.

Record-Breaking Results

When it comes to evaluating new technology, the key question is always: How effective is it? The team’s rigorous testing revealed remarkable outcomes:

- The system correctly identifies signs 98% of the time (precision).

- It captures 98% of all signs performed (recall).

- It achieves an overall performance score (F1 score) of 99%.
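For readers unfamiliar with these metrics, the sketch below shows the standard definitions of precision, recall, and F1 computed from detection counts. It assumes the figures above correspond to these standard metrics; the counts in the usage note are made up for illustration and are not the study's data.

```python
def precision_recall_f1(
    true_positives: int, false_positives: int, false_negatives: int
) -> tuple[float, float, float]:
    """Compute precision, recall, and F1 from raw detection counts."""
    predicted = true_positives + false_positives  # everything the model flagged
    actual = true_positives + false_negatives     # everything that was really there
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

With 90 correct detections, 10 false alarms, and 30 missed signs, precision is 0.90, recall is 0.75, and F1 is roughly 0.82.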

“Results from our research demonstrate our model's ability to accurately detect and classify American Sign Language gestures with very few errors,” explains Dr. Bader Alsharif.

The system proves reliable across various real-world scenarios, including different lighting conditions, hand positions, and users with diverse signing styles.

A Breakthrough in Sign Language Recognition

This innovation pushes the boundaries of what’s possible in sign language recognition, overcoming challenges that have hindered earlier systems. The seamless integration of MediaPipe’s tracking and YOLOv8’s detection created a solution that’s both robust and precise.

“The success of this model is largely due to the careful integration of transfer learning, meticulous dataset creation, and precise tuning,” notes Mohammad Ilyas, a co-author of the study. The team’s meticulous approach has paid off, resulting in a groundbreaking system with exceptional performance.

What This Means for Communication

The success of this system paves the way for exciting advancements in making communication more accessible and inclusive.

The research team isn’t stopping at recognizing individual letters. The next challenge is expanding the system’s ability to interpret a broader range of hand shapes and gestures. For instance, some signs, like the ASL letters ‘M’ and ‘N’, appear almost identical. Researchers are working to enhance the system's ability to discern such subtle differences. As Dr. Alsharif explains:

“Importantly, findings from this study emphasize not only the robustness of the system but also its potential to be used in practical, real-time applications.”

Future Goals

The team is now focused on:

- Optimizing the system to run smoothly on everyday devices

- Enhancing its speed for seamless, real-world conversations

- Ensuring reliability across diverse environments

Dean Stella Batalama of FAU’s College of Engineering and Computer Science highlights the broader vision:

“By improving American Sign Language recognition, this work contributes to creating tools that can enhance communication for the deaf and hard-of-hearing community.”

A Step Toward Inclusive Communication

Imagine visiting a doctor’s office or attending a class where this technology bridges communication gaps instantly. The ultimate goal is to make daily interactions smoother and more natural for everyone. By creating tools that facilitate genuine connection, this system could help break down barriers across education, healthcare, and everyday life.